
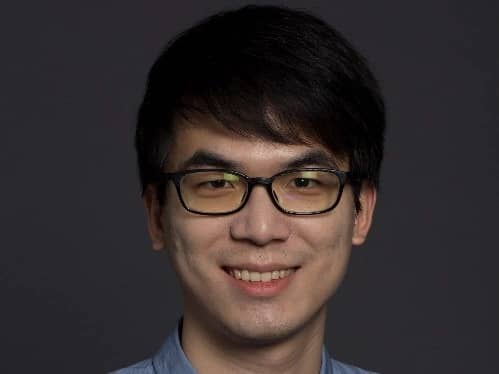



Bowen Cheng

Bowen Cheng (程博文) is an artificial intelligence researcher at Meta's Superintelligence Lab. He specializes in multimodal foundation models and has contributed to major AI projects, including OpenAI's GPT-4o and Tesla's Full Self-Driving (FSD) software. [1] [2]

Education

Cheng received both his Bachelor of Science and his Ph.D. in Electrical and Computer Engineering (ECE) from the University of Illinois Urbana-Champaign (UIUC). During his doctoral studies, his advisors were Professor Alexander Schwing and Professor Thomas Huang. [1] [2] [4]

Career

As of 2025, Bowen Cheng is a researcher at Meta's Superintelligence Lab (MSL). He joined the newly formed group from OpenAI, where he was a researcher on the post-training team, working on multimodal understanding and interaction and on building multimodal models. Before OpenAI, Cheng was a Senior Research Scientist on Tesla's Autopilot team. As a student, he completed research internships at several prominent technology labs, including Facebook AI Research (FAIR) in New York City and Menlo Park, Google Research in Los Angeles, Microsoft Research in Redmond, and Microsoft Research Asia in Beijing. [1] [3] [2] [4] [5] [6]

Cheng has been a core contributor to several high-profile projects in the field of artificial intelligence. His work spans computer vision, autonomous driving, and large-scale multimodal models.

His notable contributions include:

- Meta Superintelligence Lab: Joined as a research scientist in a team assembled to focus on advanced AI research and development. [2]

- OpenAI:
  - GPT-4o: Core contributor, focusing on perception and on the advanced voice mode, which offered significantly lower latency in audio interaction.
  - Thinking with Images: Initiated the research and was a foundational contributor to the project, which he described as a paradigm shift in how perception problems are solved.
  - o3 and o4-mini: Core contributor to both models.
  - GPT-4.1: Listed as a core contributor.
  - OpenAI Audio API: Contributed research to the next-generation audio models. [1] [3]

- Tesla: Senior Research Scientist on the Autopilot team, working on the Full Self-Driving (FSD) software.

- Academic research:
  - Mask2Former: A universal image segmentation architecture.
  - MaskFormer: A mask-classification architecture for semantic and panoptic segmentation.
  - Panoptic-DeepLab: A bottom-up approach to panoptic segmentation.

These projects reflect his work on image segmentation, including segmentation transformers, and on multimodal systems. [1] [5] [6]

Research Interests

Cheng's primary research interest is building real-time multimodal interaction systems: AI that processes streaming audio and video input and produces streaming audio and video output in real time. His vision for such systems includes an infinite context window for seamless interaction, advanced long-term memory, and the ability to stay current with new information while proactively creating content. [1] [6] [5]
