Stempoint

Stempoint is a distributed artificial intelligence (AI) infrastructure platform designed to provide a unified environment for AI development by integrating access to AI models with a decentralized network of GPU computing resources. The project aims to serve as a comprehensive solution for developers and enterprises by combining a multi-model API with on-demand computational power. [1] [2]

Overview

Stempoint is being developed to address challenges in the AI industry, such as fragmented access to various foundational AI models and the high cost or scarcity of high-performance GPU computing power. The platform's stated goal is to create a single, globally oriented interface for AI model training, fine-tuning, and inference. It seeks to achieve this by marshaling underutilized GPU resources from a global network of individual and institutional providers, making them accessible on demand. [3]

The platform's core design combines two primary components: an AI Agent Aggregation Layer and a hybrid compute infrastructure. The aggregation layer functions as a unified gateway, simplifying how developers interact with a wide range of leading AI models. The compute infrastructure utilizes a decentralized physical infrastructure network (DePIN) alongside a centralized elastic cloud to supply the necessary processing power for intensive AI tasks. This hybrid approach is intended to offer flexibility, scalability, and cost optimization for users. [4]

Ecosystem

AI Developers and Individual Builders

Access multiple foundation models and scalable GPU resources with minimal friction, generating API usage and compute demand that drives token utility and network activity.

Enterprises and AI Teams

Utilize large-scale compute resources for model training and deployment with unified billing and cost control, providing consistent, high-value demand that strengthens the Hash Forest and DePIN networks.

Algorithm and Model Providers

Convert proprietary AI models into accessible APIs for monetization, diversifying the platform’s offerings and increasing the overall volume of applications and model interactions.

GPU Compute Node Providers

Contribute idle or commercial GPU capacity to the network in exchange for token rewards, expanding the decentralized infrastructure and maintaining low-latency, elastic compute availability.

End-Application Consumers (B2B/B2C)

Engage with AI-powered products and services—such as chatbots, generative tools, and analytics platforms—creating real-world use cases that reinforce the ecosystem’s technical and economic foundation.

Web3 Investors and Governance Participants

Stake or hold tokens to participate in governance decisions and earn yields, adding liquidity and long-term stability to the ecosystem’s economic and decision-making framework. [13]

Key Features

Public and Private AI Infrastructure

Offers customizable on-premise and hybrid cloud clusters with managed operations, site optimization, and full observability. The system supports efficient energy use, cost control, and reliability for AI workloads. [6]

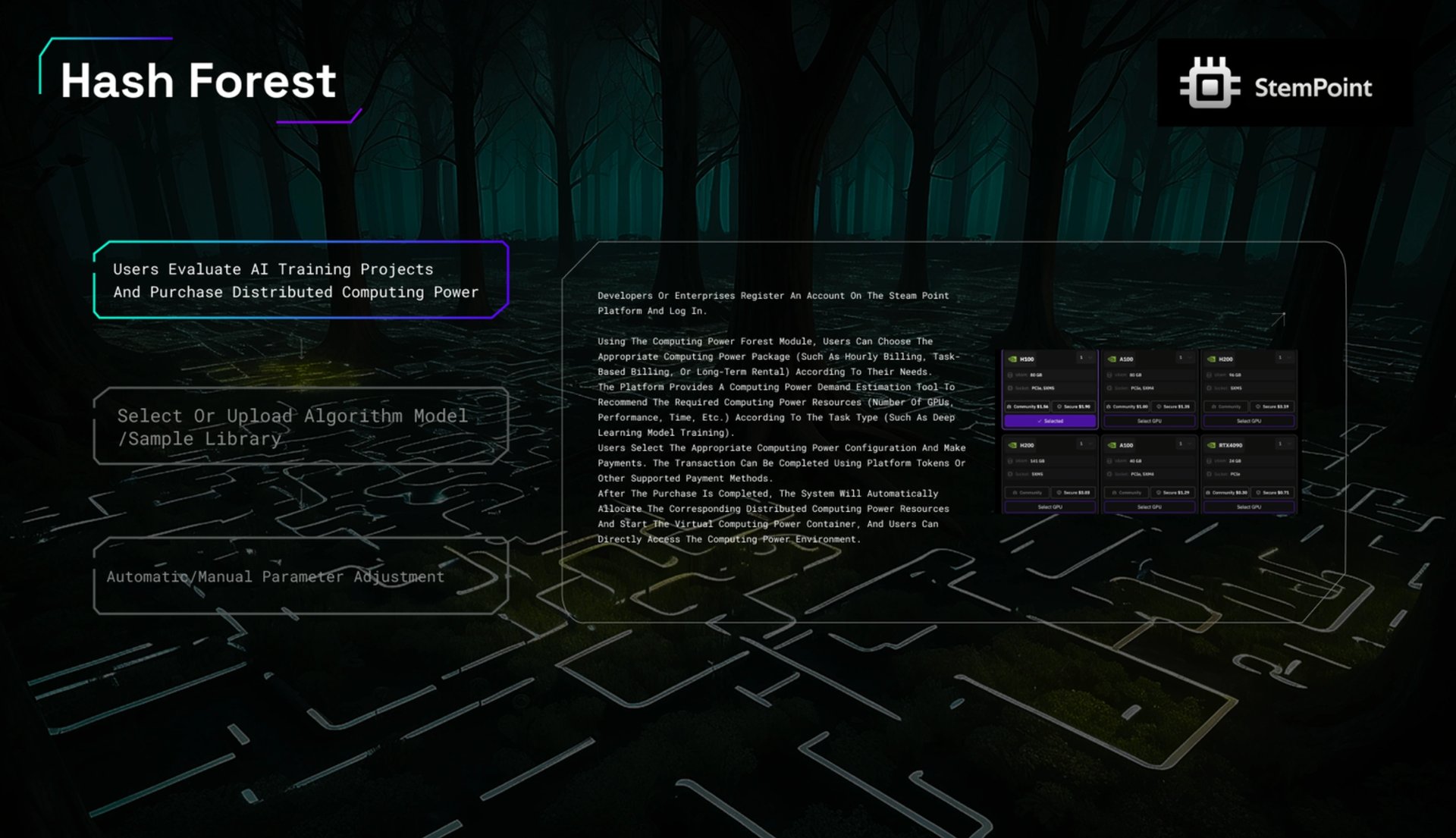

Hash Forest

An elastic GPU cloud network aggregating global idle GPUs for AI training and inference. It provides preconfigured environments with major AI frameworks and automated scheduling to optimize performance, latency, and cost. [7]

AI Computing Power Market

Standardizes compute resources into modular units for flexible rental. It includes tools for billing, performance reporting, and strategic routing based on latency, cost, or sovereignty requirements. [8]
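The routing idea described above (selecting a compute unit by latency, cost, or data-sovereignty requirements) can be illustrated with a small sketch. This is not Stempoint's scheduler; the `ComputeOffer` fields, the strategy names, and the region filter are hypothetical assumptions chosen only to show how such a policy might be expressed.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Set


@dataclass(frozen=True)
class ComputeOffer:
    # Hypothetical description of one rentable, standardized compute unit
    node_id: str
    region: str            # jurisdiction where the hardware is located
    latency_ms: float      # measured round-trip latency to the caller
    price_per_hour: float  # rental price for one unit-hour


def route(
    offers: Iterable[ComputeOffer],
    strategy: str,
    allowed_regions: Optional[Set[str]] = None,
) -> Optional[ComputeOffer]:
    """Pick one offer using an assumed 'latency' or 'cost' strategy.

    allowed_regions models a sovereignty constraint: offers outside
    these regions are never considered. Returns None if nothing qualifies.
    """
    candidates = [
        o for o in offers
        if allowed_regions is None or o.region in allowed_regions
    ]
    if not candidates:
        return None
    if strategy == "latency":
        # Lowest latency first, cheaper offer breaks ties
        return min(candidates, key=lambda o: (o.latency_ms, o.price_per_hour))
    if strategy == "cost":
        # Lowest price first, lower latency breaks ties
        return min(candidates, key=lambda o: (o.price_per_hour, o.latency_ms))
    raise ValueError(f"unknown strategy: {strategy}")
```

With three offers (an EU node at 40 ms and 2.5/hour, a US node at 15 ms and 3.0/hour, and an EU node at 80 ms and 1.2/hour), the "latency" strategy picks the US node, "cost" picks the cheap EU node, and "latency" restricted to `{"EU"}` picks the 40 ms EU node.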

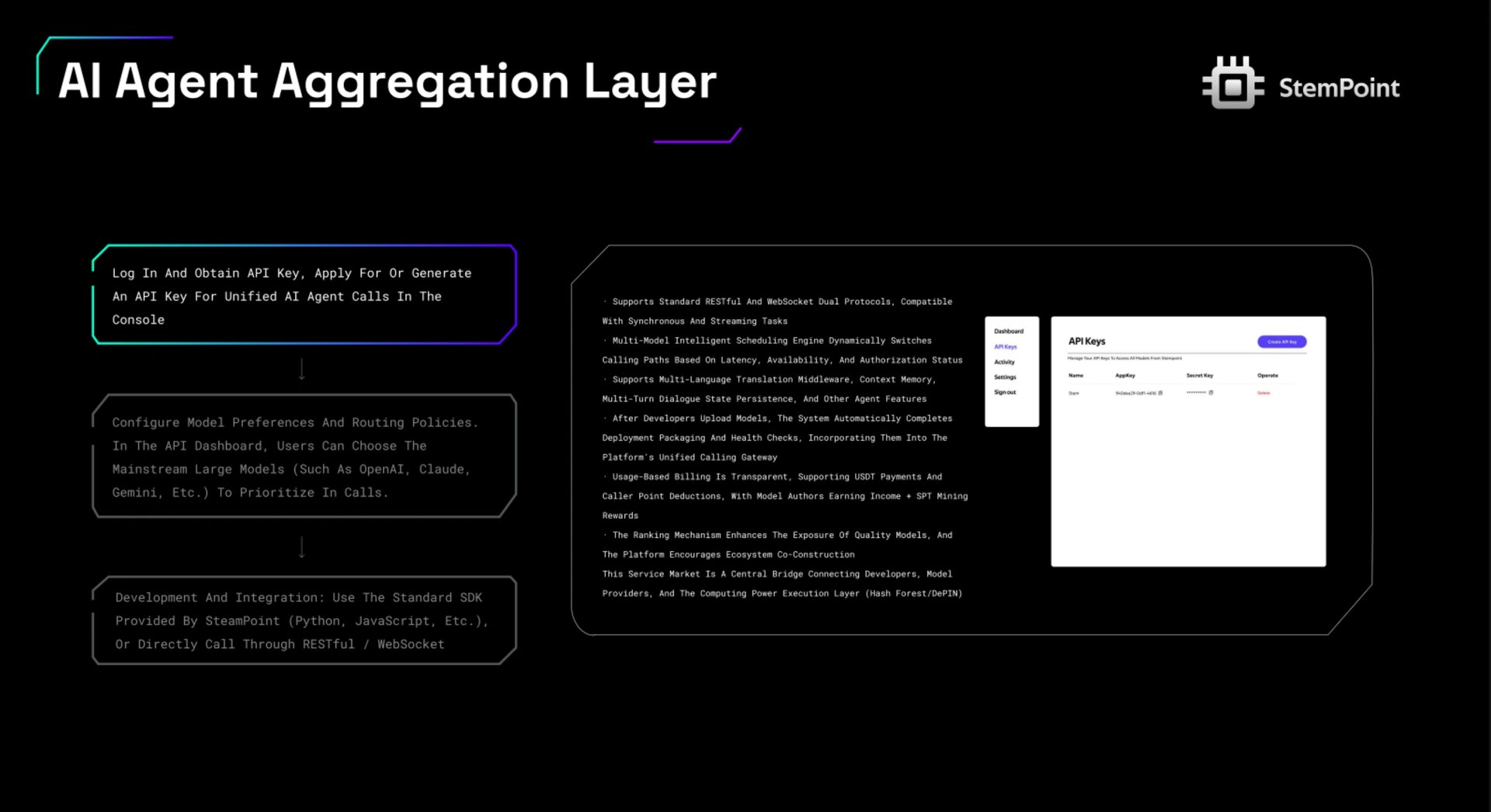

AI Agent Aggregation Layer

A unified API that connects multiple foundation models (e.g., OpenAI's GPT models, Anthropic's Claude, and Google's Gemini) with dynamic routing, translation, and memory features. It enables developers to build AI applications efficiently through a single SDK. [9]
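A single entry point that dispatches to several model backends, with fallback and a simple conversation memory, might look roughly like the sketch below. It does not use any real provider SDK or Stempoint's API: each backend is modeled as a plain function from prompt to text, and the `ModelGateway` class and backend names are hypothetical.

```python
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical backend: any callable that maps a prompt to a reply
Backend = Callable[[str], str]


class ModelGateway:
    """Illustrative unified gateway over several model backends."""

    def __init__(self) -> None:
        self._backends: Dict[str, Backend] = {}
        # Minimal "memory": (backend name, prompt, reply) for each success
        self._history: List[Tuple[str, str, str]] = []

    def register(self, name: str, backend: Backend) -> None:
        self._backends[name] = backend

    def complete(self, prompt: str, preferred: Sequence[str]) -> Tuple[str, str]:
        """Try backends in preference order and return (name, reply)
        from the first one that succeeds."""
        errors: Dict[str, str] = {}
        for name in preferred:
            backend = self._backends.get(name)
            if backend is None:
                errors[name] = "not registered"
                continue
            try:
                reply = backend(prompt)
            except Exception as exc:  # fall through to the next backend
                errors[name] = str(exc)
                continue
            self._history.append((name, prompt, reply))
            return name, reply
        raise RuntimeError(f"all backends failed: {errors}")

    @property
    def history(self) -> List[Tuple[str, str, str]]:
        return list(self._history)
```

The caller writes against one `complete` method regardless of which model ultimately answers; swapping providers or changing their order is a configuration change rather than a code change.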

AI Compute RWA (Real-World Assets)

Tokenizes GPU cluster ownership and income rights on-chain, allowing transparent accounting, revenue distribution, and secondary market liquidity. [10]
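The revenue-distribution step can be pictured as a pro rata split of a cluster's income across holders of its income-right tokens. The sketch below is an assumption about how such a split could be computed, not Stempoint's on-chain logic: amounts are integers in the smallest currency unit, and units lost to rounding are handed out by the largest remainder method.

```python
from typing import Dict


def distribute_revenue(revenue: int, holdings: Dict[str, int]) -> Dict[str, int]:
    """Split `revenue` (smallest currency units) pro rata to `holdings`
    (income-right tokens per holder). Hypothetical illustration."""
    total = sum(holdings.values())
    if total <= 0:
        raise ValueError("no outstanding income-right tokens")
    # Floor of each holder's exact share
    payouts = {h: revenue * amt // total for h, amt in holdings.items()}
    # Units lost to rounding go to holders with the largest fractional parts;
    # ties are broken by holder name so the result is deterministic
    leftover = revenue - sum(payouts.values())
    by_fraction = sorted(holdings, key=lambda h: (-(revenue * holdings[h] % total), h))
    for h in by_fraction[:leftover]:
        payouts[h] += 1
    return payouts
```

Working in integers keeps the payouts summing exactly to the revenue, which matters for transparent, auditable accounting.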

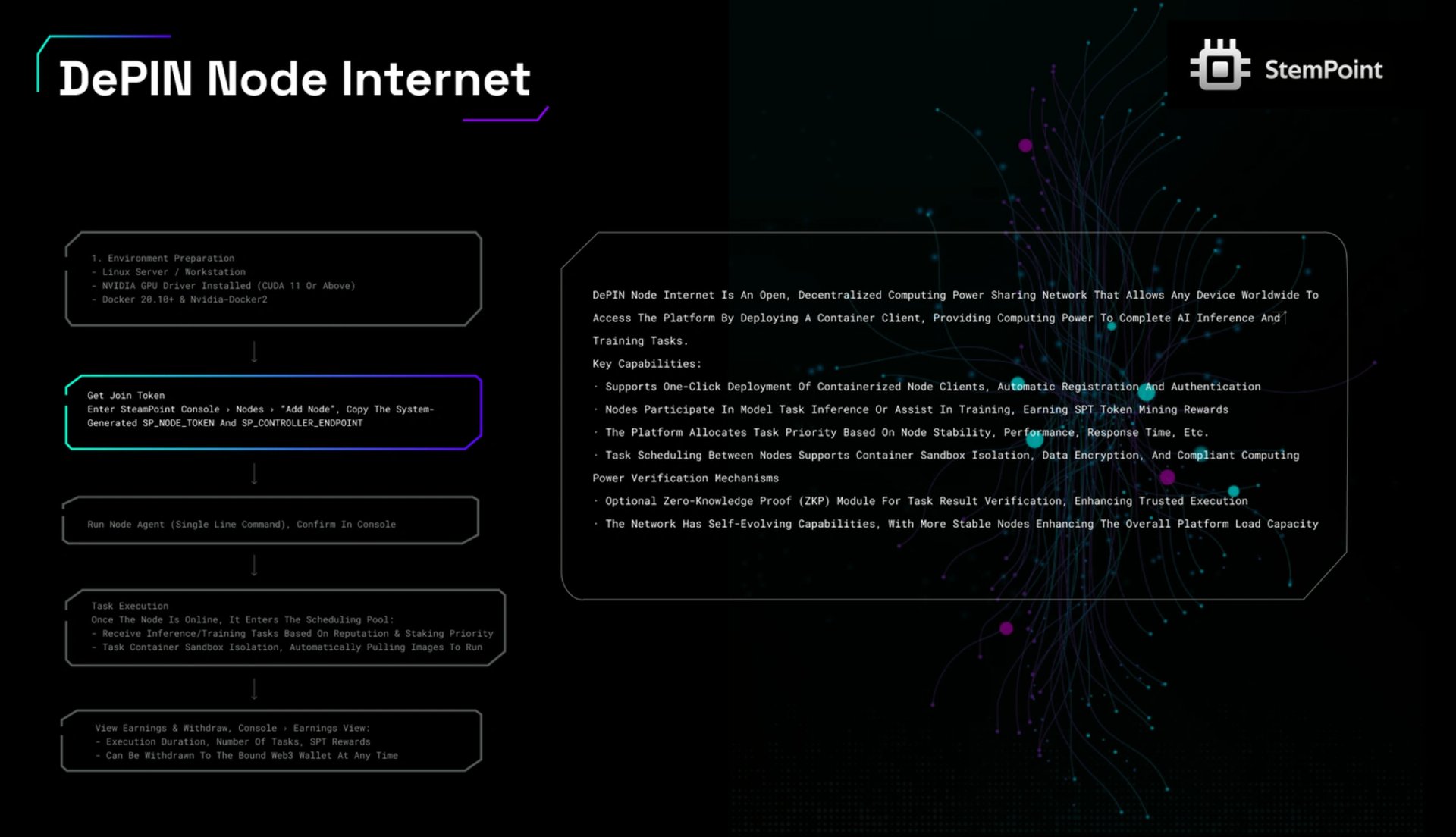

DePIN Node Network

A decentralized compute network where participants contribute GPU resources via containerized clients. Nodes are designed to execute AI tasks securely and receive tokenized rewards, with the goal of providing scalable, verifiable, and distributed computing. [11]

Edge AI Hardware Shared Mining

Connects edge devices such as robots and NPUs to perform lightweight AI computations under privacy-preserving conditions, expanding data and compute capacity at the network edge. [12]

Use Cases

- AI Model Training: High-performance, distributed training of large-scale AI models using the combined power of the Hash Forest and the DePIN network.

- AI Model Inference: Running scalable and cost-effective inference tasks for deployed AI applications that require real-time or batch processing.

- AI Agent Development: Building complex AI agents that require persistent context memory and access to multiple foundational models through a single, unified interface.

- Algorithm Monetization: Wrapping custom or proprietary algorithms into callable, pay-per-use APIs that can be offered to other developers on the platform.

- GPU Hardware Monetization: Enabling individuals and data centers to earn passive income by contributing their underutilized GPU hardware to the DePIN Node Network.

- Research and Development: Providing researchers and developers with on-demand access to powerful computing resources for experimenting with and fine-tuning custom AI models. [1] [2]

Tokenomics

The Stempoint ecosystem is powered by its native utility and governance token, $SPT, which has a total supply of 10 billion tokens. The token is integral to the platform's operations, creating the economic incentives that connect compute resource providers with AI application developers. The supply is allocated as follows:

- Mining: 50%

- Foundation: 15%

- Team: 10%

- Community: 5%

- Fundraising: 20%
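The percentages above can be converted into absolute token amounts, assuming the 10 billion figure is the fixed total supply. The short calculation below checks that the buckets sum to 100% and shows, for example, that the Mining share works out to 5 billion SPT.

```python
TOTAL_SUPPLY = 10_000_000_000  # 10 billion SPT

ALLOCATION_PERCENT = {
    "Mining": 50,
    "Foundation": 15,
    "Team": 10,
    "Community": 5,
    "Fundraising": 20,
}

# The five buckets should account for the entire supply
assert sum(ALLOCATION_PERCENT.values()) == 100

allocation = {name: TOTAL_SUPPLY * pct // 100 for name, pct in ALLOCATION_PERCENT.items()}

for name, amount in allocation.items():
    print(f"{name:<12} {amount:>15,} SPT")
```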

Token Utilities

- Compute Payments: Developers and enterprises use SPT to pay for GPU resources consumed during AI model training and inference on both the Hash Forest and the DePIN network, as well as for API calls to the AI Agent Aggregation Layer.

- Node Rewards: Compute providers who contribute their GPU hardware to the DePIN Node Network are rewarded with SPT tokens for successfully completing tasks. This serves as the primary incentive for expanding the network's capacity.
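One plausible way to tie node rewards to successfully completed work is to split a fixed per-epoch reward pool in proportion to verified GPU time. The epoch pool, the `TaskResult` record, and the use of GPU-seconds as the work measure are all hypothetical assumptions for illustration; the source does not specify Stempoint's reward formula.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class TaskResult:
    # Hypothetical record of one task executed by a node
    node_id: str
    gpu_seconds: int  # GPU time the task consumed
    verified: bool    # whether the result passed verification


def epoch_rewards(pool: int, results: List[TaskResult]) -> Dict[str, int]:
    """Split an assumed per-epoch SPT pool by verified GPU-seconds."""
    work: Dict[str, int] = {}
    for r in results:
        if r.verified:  # unverified or failed tasks earn nothing
            work[r.node_id] = work.get(r.node_id, 0) + r.gpu_seconds
    total = sum(work.values())
    if total == 0:
        return {}
    # Integer floor division; any rounding dust stays in the pool
    return {node: pool * w // total for node, w in work.items()}
```

Rewarding only verified work is what links the incentive to "successfully completing tasks" rather than to merely being online.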

Governance

The governance model for Stempoint is intended to allow stakeholders to influence the platform's strategic direction. By staking SPT tokens, holders gain voting rights on key proposals. This mechanism is designed to guide decisions related to computing power incentives, model integration priorities, and other platform parameters. The goal is to create a decentralized and participatory ecosystem where the community has a voice in the project's long-term evolution. [4] [2]
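Stake-weighted voting of the kind described above can be sketched as a tally where each vote counts in proportion to the voter's staked SPT. The quorum threshold, the "yes"/"no"/"abstain" options, and the rule that a tie fails are hypothetical parameters, not documented Stempoint rules.

```python
from typing import Dict


def tally(
    votes: Dict[str, str],
    stakes: Dict[str, int],
    total_staked: int,
    quorum: float = 0.1,
) -> str:
    """Stake-weighted tally under assumed rules: abstentions count toward
    quorum, and a proposal passes only if 'yes' stake exceeds 'no' stake."""
    weights = {"yes": 0, "no": 0, "abstain": 0}
    for voter, choice in votes.items():
        if choice not in weights:
            raise ValueError(f"invalid choice: {choice}")
        weights[choice] += stakes.get(voter, 0)  # unstaked voters weigh 0
    turnout = sum(weights.values())
    if turnout < quorum * total_staked:
        return "no quorum"
    return "passed" if weights["yes"] > weights["no"] else "rejected"
```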
